Objective

Interpretable machine learning means explaining machine learning and deep learning models to humans who have domain knowledge. The explanation should be comprehensible to people, expressed (i) in natural language or (ii) in easy-to-understand representations, and it should also make sense to a domain expert.

Need

Healthcare is not a standard manufactured product, and a patient is not a widget on a manufacturing line. Each patient has needs unique to his or her

- physiology,

- genetics,

- social circumstances,

- and other characteristics.

Whatever applications or models we build should therefore have interpretability capabilities that give clear explanations from both the modelling and the clinical (domain) perspective.

Components of interpretability

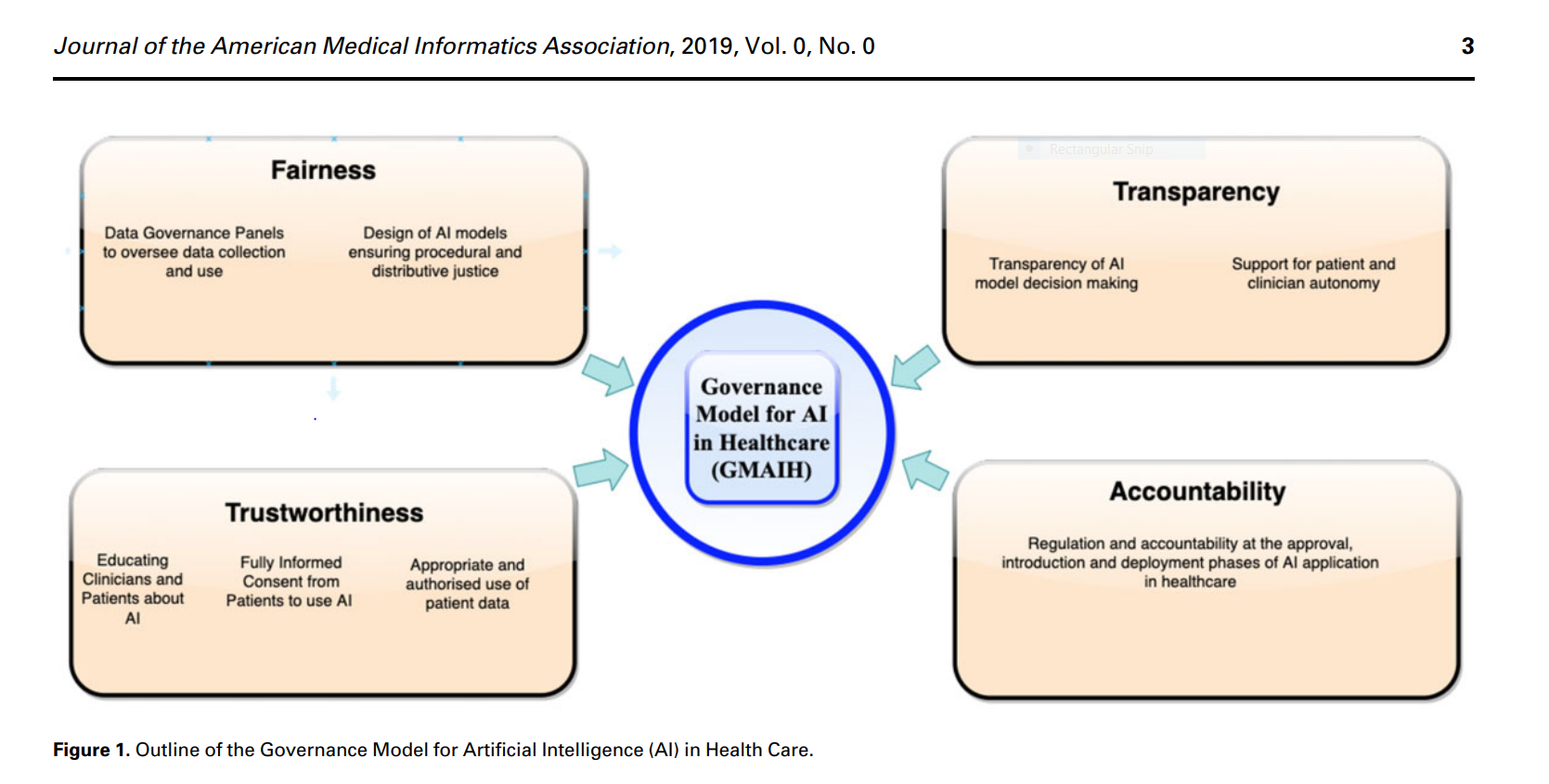

According to “A governance model for the application of AI in health care (GMAIH)” by Sandeep Reddy, Sonia Allan, Simon Coghlan, and Paul Cooper, any model built using electronic health record (EHR) data should have the following four characteristics:

1) Fairness

2) Transparency

3) Trustworthiness

4) Accountability

Source: https://www.researchgate.net/publication/337012288

Fairness

Wikipedia describes fairness in machine learning as follows: an algorithm is said to be fair, or to have fairness, if its results are independent of given variables, especially those considered sensitive, such as traits of individuals that should not correlate with the outcome (e.g. gender, ethnicity, sexual orientation, disability).
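One simple way to check the independence described above is to compare how often a model predicts the positive outcome for each group of a sensitive attribute. The sketch below is only an illustration: the function names, the toy predictions, and the group labels are hypothetical, and the gap in positive rates (often called the demographic parity difference) is just one of several possible fairness measures.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group positive rates (0 means equal rates)."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: binary model outputs and a sensitive attribute.
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]

rates = positive_rate_by_group(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates)
print(gap)
```

A gap close to zero suggests that the predictions do not depend on the sensitive attribute in this narrow sense. It does not rule out other forms of bias, such as confounding.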

For fairness in modelling, the models should not be biased (for example, in how they represent the data), and they need to address confounding factors as well. This calls for collaboration between AI experts, clinical experts, and legal experts to design the model for fairness in every respect: the AI expert is responsible for the model's accuracy with respect to the data, and the clinical expert assesses the model's applicability.

Transparency

For transparency, the models should be able to give end users a clear explanation (interpretation) of how they work, which increases the users' trust. Explainable machine learning and deep learning (XAI) has emerged as a key innovation: whatever models we build, whether linear or black-box, we can provide global and local explanations using XAI techniques such as LIME and SHAP.
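The difference between local and global explanations can be sketched with a small linear model. The code below does not call the LIME or SHAP libraries. It is a simplified illustration under stated assumptions: the feature names, weights, intercept, and patient rows are hypothetical, and each feature's contribution is taken as its weight times its deviation from the dataset mean. For a linear model this matches the SHAP values when features are treated as independent.

```python
def predict(weights, intercept, x):
    """Linear model output for one record."""
    return intercept + sum(weights[name] * x[name] for name in weights)


def local_contributions(weights, x, baseline):
    """Local explanation: per-feature contribution for one record vs. the baseline."""
    return {name: weights[name] * (x[name] - baseline[name]) for name in weights}


def global_importance(weights, rows, baseline):
    """Global explanation: mean absolute local contribution per feature."""
    totals = {name: 0.0 for name in weights}
    for row in rows:
        for name, value in local_contributions(weights, row, baseline).items():
            totals[name] += abs(value)
    return {name: totals[name] / len(rows) for name in weights}


# Hypothetical model and data, for illustration only.
weights = {"age": 0.5, "systolic_bp": 0.25}
intercept = 1.0
rows = [
    {"age": 40, "systolic_bp": 120},
    {"age": 60, "systolic_bp": 140},
    {"age": 50, "systolic_bp": 130},
]

# Baseline: the mean of each feature over the dataset.
baseline = {name: sum(r[name] for r in rows) / len(rows) for name in weights}

local = local_contributions(weights, rows[0], baseline)
overall = global_importance(weights, rows, baseline)
print(local)    # why this one prediction differs from the baseline prediction
print(overall)  # which features matter most across the dataset
```

The local contributions for one record sum to the difference between that record's prediction and the baseline prediction, which is the additive property SHAP-style explanations rely on.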

Trustworthiness

For trustworthiness, proper privacy and security norms increase patients' trust, and educating clinicians on the fundamentals of AI/ML increases clinicians' trust. Similarly, AI professionals should learn some of the clinical domain. All of these help make the models more trustworthy.

Accountability

Governments should have clear regulations in place to audit and approve models against the above characteristics (fairness, transparency, and trustworthiness) before they are deployed in healthcare settings.