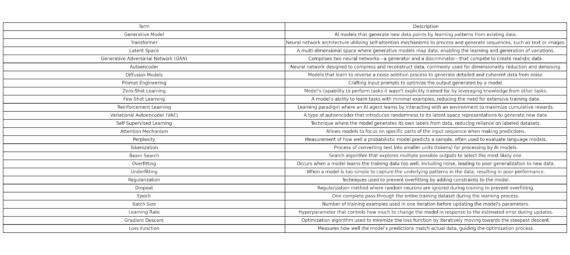

1. Generative Model: An AI model that generates new data points by learning patterns from existing data.

2. Transformer: A neural network architecture utilizing self-attention mechanisms to process and generate sequences, such as text or images.

3. Latent Space: A multi-dimensional space where generative models map data, facilitating the learning and generation of variations.

4. Generative Adversarial Network (GAN): A model made up of two neural networks, a generator and a discriminator, that compete with each other so that the generator learns to create realistic data.

5. Autoencoder: A neural network designed to compress and reconstruct data, commonly used for dimensionality reduction and denoising.

6. Diffusion Models: Models that learn to reverse a noise addition process to generate detailed and coherent data from noise.

7. Prompt Engineering: Crafting input prompts to optimize the output generated by a model.

8. Zero-Shot Learning: The capability of a model to perform tasks it wasn’t explicitly trained for by leveraging knowledge from other tasks.

9. Few-Shot Learning: A model’s ability to learn tasks with minimal examples, reducing the need for extensive training data.

10. Reinforcement Learning: A learning paradigm where an AI agent learns to make decisions by interacting with an environment to maximize cumulative rewards.

11. Variational Autoencoder (VAE): A type of autoencoder that encodes each input as a probability distribution over the latent space rather than a single point, so that sampling from that space generates new data.

12. Self-Supervised Learning: A technique where the model generates its own labels from the data, minimizing reliance on labeled datasets.

13. Attention Mechanism: Allows models to focus on specific parts of the input sequence when making predictions.

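14. Perplexity: A measure of how well a probabilistic model predicts a sample, computed as the exponential of the average negative log-likelihood per token; lower values indicate better predictions. It is often used to evaluate language models.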

15. Tokenization: The process of converting text into smaller units (tokens) for processing by AI models.

16. Beam Search: A decoding algorithm that keeps a fixed number of the most probable partial outputs (the beam width) at each step and returns the highest-scoring complete sequence it finds.

17. Overfitting: When a model learns the training data too well, including noise, leading to poor generalization to new data.

18. Underfitting: When a model is too simple to capture the underlying patterns in the data, resulting in poor performance.

19. Regularization: Techniques used to prevent overfitting by adding constraints to the model.

20. Dropout: A regularization method where random neurons are ignored during training to prevent overfitting.

21. Epoch: One complete pass through the entire training dataset during the learning process.

22. Batch Size: The number of training examples utilized in one iteration before updating the model’s parameters.

23. Learning Rate: A hyperparameter that sets the step size, that is, how much the model’s weights change in response to the estimated error at each update.

24. Gradient Descent: An optimization algorithm that minimizes the loss function by iteratively updating the model’s parameters in the direction of steepest descent, that is, the negative gradient.

25. Loss Function: A function that measures how well the model’s predictions match the actual data, guiding the optimization process.
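
Several of the training terms above (epoch, learning rate, gradient descent, loss function) can be seen working together in a small example. The following is a minimal sketch, not a reference implementation: it fits a straight line with full-batch gradient descent in plain Python. The function names `mse_loss` and `train`, the toy data, and the chosen learning rate and epoch count are all illustrative assumptions.

```python
# Minimal sketch: full-batch gradient descent fitting y = w * x + b.
# The data, learning rate, and epoch count are illustrative assumptions.

def mse_loss(w, b, xs, ys):
    """Loss function: mean squared error between predictions and targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Return the fitted (w, b) and the loss recorded after each epoch."""
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(epochs):  # one epoch = one full pass over the data
        # Gradients of the MSE loss with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient; the learning rate scales the step.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(mse_loss(w, b, xs, ys))
    return w, b, history

# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b, history = train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, final loss={history[-1]:.6f}")
```

On this toy data the fitted values should end up close to w = 2 and b = 1, and the recorded loss should shrink over the epochs. Here the batch size equals the whole dataset; a mini-batch variant would compute the gradients on a subset of the examples at each update.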

By familiarizing yourself with these terms, you can build a solid foundation in generative AI, enabling you to navigate and contribute effectively to this dynamic field.

Reference Document

https://medium.com/ai-in-plain-english/top-25-generative-ai-terminologies-you-must-know-6a3bb0300988