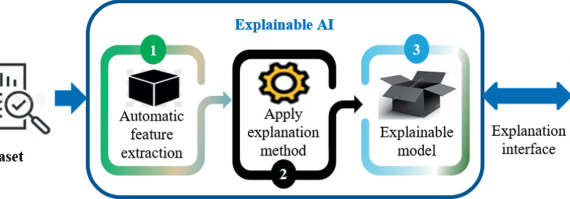

Explainable AI (XAI) refers to a set of techniques and approaches that help humans understand how machine learning and artificial intelligence systems make decisions. XAI methods are commonly divided into two types: model-agnostic and model-specific. In this blog post, we will explore both types, along with some examples of each.

Model-Agnostic XAI

Model-agnostic XAI methods are techniques that can be applied to any machine learning model, regardless of its architecture or training algorithm. They treat the model as a black box: they only need to query it with inputs and observe its outputs, so they require no knowledge of the model’s internal workings.

Some examples of model-agnostic XAI methods include:

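LIME (Local Interpretable Model-Agnostic Explanations): LIME is a technique that generates local explanations for a machine learning model. It perturbs a specific input, queries the original model on the perturbed samples, weights each sample by its proximity to the input, and fits a simple interpretable model (typically a sparse linear model) that approximates the original model’s behavior in that neighborhood.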
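To make the local-surrogate idea concrete, here is a minimal sketch in plain Python. It is not the `lime` library: the `black_box` function, the Gaussian perturbations, the kernel width, and the helper names are all assumptions chosen for illustration, and the surrogate is an ordinary weighted linear fit rather than the sparse model LIME normally uses.

```python
import math
import random

def black_box(x):
    # Hypothetical opaque model; any function of the features would do.
    return math.sin(x[0]) + x[1] ** 2

def solve(a, b):
    # Gaussian elimination with partial pivoting for a small linear system.
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x

def local_surrogate(predict, x0, n_samples=2000, scale=0.1,
                    kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model around x0 (assumed settings)."""
    rng = random.Random(seed)
    d = len(x0)
    # Accumulate the weighted normal equations (Z^T W Z) beta = Z^T W y.
    ztwz = [[0.0] * (d + 1) for _ in range(d + 1)]
    ztwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        offset = [rng.gauss(0.0, scale) for _ in range(d)]
        z = [1.0] + offset                      # intercept + centered features
        x = [a + o for a, o in zip(x0, offset)]  # perturbed input
        dist2 = sum(o * o for o in offset)
        w = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        y = predict(x)
        for i in range(d + 1):
            ztwy[i] += w * z[i] * y
            for j in range(d + 1):
                ztwz[i][j] += w * z[i] * z[j]
    beta = solve(ztwz, ztwy)
    return beta[0], beta[1:]

intercept, weights = local_surrogate(black_box, [0.0, 1.0])
print("local feature weights:", weights)
```

Around the point (0, 1) the fitted weights should land close to the local slopes of the hypothetical model (about 1 for the first feature and 2 for the second), which is the sense in which the surrogate explains this one prediction.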

SHAP (SHapley Additive exPlanations): SHAP is a technique that provides feature importance scores for a machine learning model, based on Shapley values from cooperative game theory. SHAP works by computing the contribution of each feature to the prediction for a given input, averaging that feature’s marginal contribution over all possible subsets (coalitions) of the other features.
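The averaging over feature subsets can be written out by brute force for a tiny model. The sketch below is not the `shap` library; it assumes a hypothetical three-feature `model` and assumes that an "absent" feature is replaced by a fixed baseline value (the library instead averages over background data). Exact enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial

def model(x):
    # Hypothetical model with an interaction between features 1 and 2.
    return 3.0 * x[0] + 2.0 * x[1] * x[2]

def shapley_values(predict, x, baseline):
    """Exact Shapley values; absent features take their baseline value."""
    n = len(x)

    def value(coalition):
        masked = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(masked)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]  # assumed reference input
phi = shapley_values(model, x, baseline)
print(phi)
```

For this input the interaction term is split evenly between the two features that produce it, and the scores add up to the difference between the prediction for `x` and the prediction for the baseline.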

Anchors: Anchors are human-friendly, easily understandable if-then rules that capture the behavior of a machine learning model for a specific input. An anchor is a set of conditions on the input that is sufficient to "anchor" the prediction: as long as the conditions hold, changes to the remaining features leave the prediction unchanged with high probability.
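One way to picture an anchor is to estimate how often the prediction survives when everything outside the rule is resampled. The sketch below only scores candidate rules; it does not implement the search over rules that the full method performs. The `classifier`, the feature ranges, and the choice to pin features to their exact values (rather than to predicates such as "income > 50") are assumptions made for illustration.

```python
import random

def classifier(x):
    # Hypothetical black box: approve (1) only for high income and low debt.
    return 1 if x["income"] > 50 and x["debt"] < 20 else 0

def sample_feature(name, rng):
    # Assumed perturbation distribution: independent uniform ranges.
    ranges = {"income": (0, 100), "debt": (0, 40), "age": (18, 80)}
    lo, hi = ranges[name]
    return rng.uniform(lo, hi)

def anchor_precision(predict, instance, anchor, n_samples=2000, seed=0):
    """Share of perturbed inputs that keep the original prediction
    when the features named in `anchor` are held at their original values."""
    rng = random.Random(seed)
    target = predict(instance)
    hits = 0
    for _ in range(n_samples):
        perturbed = {
            name: value if name in anchor else sample_feature(name, rng)
            for name, value in instance.items()
        }
        hits += predict(perturbed) == target
    return hits / n_samples

instance = {"income": 80, "debt": 5, "age": 30}
precision_full = anchor_precision(classifier, instance, {"income", "debt"})
precision_income = anchor_precision(classifier, instance, {"income"})
precision_age = anchor_precision(classifier, instance, {"age"})
print(precision_full, precision_income, precision_age)
```

Holding both income and debt fixed keeps the prediction in every sample, so that pair behaves like an anchor for this instance, while income alone or age alone leaves the prediction free to flip.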

Model-Specific XAI

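Model-specific XAI methods are designed to provide explanations for specific types of machine learning models. Because they exploit the model’s structure (for example, the splits of a tree or the gradients of a neural network), they are often more faithful to the model and cheaper to compute than model-agnostic methods, but they require access to the model’s internals and do not transfer to other model types.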

Some examples of model-specific XAI methods include:

Decision trees: Decision trees are inherently interpretable models rather than a separate explanation method. Their structure is a graphical representation of the decision-making process, so any prediction can be explained by tracing the path of split conditions from the root to the leaf that produced it.
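Tracing a decision path takes only a few lines. The sketch below uses a small hand-written tree stored as nested dictionaries; the feature names, thresholds, and labels are hypothetical and stand in for a tree learned from data.

```python
# Hypothetical two-level tree; a trained tree would have the same shape.
tree = {
    "feature": "income", "threshold": 50,
    "left": {"label": "deny"},
    "right": {
        "feature": "debt", "threshold": 20,
        "left": {"label": "approve"},
        "right": {"label": "deny"},
    },
}

def explain(node, sample):
    """Return the predicted label and the split conditions that led to it."""
    path = []
    while "label" not in node:
        name, threshold = node["feature"], node["threshold"]
        if sample[name] <= threshold:
            path.append(f"{name} <= {threshold}")
            node = node["left"]
        else:
            path.append(f"{name} > {threshold}")
            node = node["right"]
    return node["label"], path

label, path = explain(tree, {"income": 80, "debt": 5})
print(label, "because", " and ".join(path))
# prints: approve because income > 50 and debt <= 20
```

The returned path is the explanation: it lists exactly the conditions the input satisfied on its way to the leaf.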

Gradient boosting feature importance: Gradient boosting models can be explained using built-in feature importance scores. Unlike SHAP or LIME, these scores are global rather than per-input: they aggregate, over all trees in the ensemble, how much the splits on each feature reduced the training loss (or, in some variants, how often each feature was used to split).
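A toy example shows where gain-based importance comes from. The sketch below fits a small boosted ensemble of one-split trees (stumps) to synthetic data in plain Python and credits each split's reduction in squared error to the feature it used. The data, the learning rate, and the function names are assumptions for illustration; real libraries compute their importance scores with their own variants of this bookkeeping.

```python
import random

def best_stump(xs, residuals):
    """Best single split as (gain, feature, threshold, left_mean, right_mean)."""
    n = len(xs)
    total = sum(residuals)
    best = (0.0, None, None, 0.0, 0.0)
    for f in range(len(xs[0])):
        order = sorted(range(n), key=lambda i: xs[i][f])
        left_sum = 0.0
        for k in range(1, n):
            left_sum += residuals[order[k - 1]]
            lo, hi = xs[order[k - 1]][f], xs[order[k]][f]
            if lo == hi:
                continue  # cannot split between identical values
            right_sum = total - left_sum
            # Reduction in squared error from splitting between lo and hi.
            gain = (left_sum ** 2 / k + right_sum ** 2 / (n - k)
                    - total ** 2 / n)
            if gain > best[0]:
                best = (gain, f, (lo + hi) / 2,
                        left_sum / k, right_sum / (n - k))
    return best

def boosted_importance(xs, ys, n_rounds=20, learning_rate=0.3):
    """Boost stumps on residuals and sum the gain credited to each feature."""
    pred = [sum(ys) / len(ys)] * len(ys)
    importance = [0.0] * len(xs[0])
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, pred)]
        gain, f, threshold, left, right = best_stump(xs, residuals)
        if f is None:
            break
        importance[f] += gain
        for i, x in enumerate(xs):
            pred[i] += learning_rate * (left if x[f] <= threshold else right)
    total = sum(importance)
    return [v / total for v in importance]

rng = random.Random(0)
# Synthetic data (assumed): only feature 0 drives the target.
xs = [[rng.random(), rng.random()] for _ in range(200)]
ys = [3.0 * x[0] + rng.gauss(0.0, 0.1) for x in xs]
importance = boosted_importance(xs, ys)
print(importance)
```

Because only the first feature influences the target, almost all of the accumulated gain is credited to it. Note that the result is one score per feature for the whole model, not an explanation of any single prediction.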

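Integrated Gradients: Integrated Gradients is a technique that provides feature importance scores for deep learning models and other differentiable models. It works by integrating the gradients of the model’s output with respect to the input features along a straight-line path from a baseline input (for example, an all-zeros input) to the actual input, and scaling the result by the difference between the input and the baseline.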
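The integral can be approximated with a simple sum. The sketch below uses a tiny logistic model with a hand-written gradient so that it runs without a deep learning framework; the weights, the all-zeros baseline, and the number of steps are assumptions for illustration. In practice the gradient would come from automatic differentiation.

```python
import math

# Hypothetical model parameters.
W = [2.0, -1.0, 0.5]
B = -0.5

def model(x):
    z = sum(w * v for w, v in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))

def gradient(x):
    # Analytic gradient of the logistic output with respect to the inputs.
    p = model(x)
    return [p * (1.0 - p) * w for w in W]

def integrated_gradients(x, baseline, steps=200):
    """Midpoint-rule approximation of the path integral of gradients."""
    n = len(x)
    avg_grad = [0.0] * n
    for k in range(steps):
        alpha = (k + 0.5) / steps
        # Point on the straight line from the baseline to the input.
        point = [b + alpha * (v - b) for v, b in zip(x, baseline)]
        g = gradient(point)
        for i in range(n):
            avg_grad[i] += g[i] / steps
    # Scale the averaged gradient by the input-baseline difference.
    return [(v - b) * a for v, b, a in zip(x, baseline, avg_grad)]

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]  # assumed reference input
attributions = integrated_gradients(x, baseline)
print(attributions)
print(sum(attributions), model(x) - model(baseline))
```

A useful sanity check is that the attributions sum (up to approximation error) to the difference between the model’s output at the input and at the baseline, which the last line prints side by side.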

Conclusion

Both model-agnostic and model-specific XAI methods have their strengths and weaknesses, and the choice of XAI technique will depend on the specific problem being addressed and the type of machine learning model being used. Model-agnostic methods are more flexible and can be applied to any type of model, but because they only observe inputs and outputs, their explanations are approximations of the model’s behavior. Model-specific methods, on the other hand, can provide explanations that follow the model’s actual computation more closely, but they require access to its internal workings and only apply to the model types they were designed for.