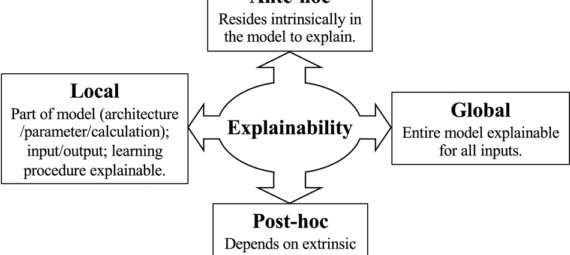

Explainable AI (XAI) is becoming more important as machine learning and artificial intelligence are used to make critical decisions. For those decisions to be checked for fairness and bias, the models behind them need to be transparent and understandable. In this blog post, we will explore the main methods used to make machine learning models explainable.

Feature importance analysis

Feature importance analysis involves identifying the features or input variables that contribute most to the output of a machine learning model. By understanding which features matter most, it is possible to gain insight into how the model is making its predictions. Feature importance analysis can be used for both linear and non-linear models: in a linear model the coefficients on standardized features are a direct measure, while for non-linear models model-agnostic techniques such as permutation importance are common. In both cases the goal is to identify the key drivers of a model’s behavior.
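One model-agnostic way to estimate feature importance is permutation importance: shuffle one feature at a time and measure how much the model’s error grows. The sketch below is a minimal, standard-library-only illustration. The `model` function, the synthetic data, and all helper names are assumptions made up for this example, not part of any particular library.

```python
import random

def model(row):
    # Hypothetical black-box model: depends strongly on x0, weakly on x1,
    # and not at all on x2.
    return 3.0 * row[0] + 0.5 * row[1]

def mean_squared_error(predict, X, y):
    return sum((predict(r) - t) ** 2 for r, t in zip(X, y)) / len(X)

def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            # Shuffle only column j, which breaks its link to the target.
            column = [r[j] for r in X]
            rng.shuffle(column)
            X_perm = [r[:j] + [v] + r[j + 1:] for r, v in zip(X, column)]
            increases.append(mean_squared_error(predict, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

# Synthetic data (an assumption for illustration): three uniform features.
data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [model(r) for r in X]

importances = permutation_importance(model, X, y)
for name, score in zip(["x0", "x1", "x2"], importances):
    print(f"{name}: {score:.4f}")
```

In this toy setup, `x0` should receive the largest score and `x2` a score of zero, because the model ignores it. On real data the scores are noisier, and strongly correlated features can share or hide importance, so the ranking is best read as a guide rather than a precise measurement.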

Local interpretability

Local interpretability involves analyzing individual predictions or decision points made by a machine learning model. By examining how the model arrived at a specific prediction, it is possible to gain insight into the model’s decision-making process. Local interpretability methods include techniques such as LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations), which generate local explanations for individual predictions.
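To make the idea of a local explanation concrete, the sketch below computes exact Shapley values for a single prediction by enumerating every feature subset. This is the game-theoretic quantity that SHAP approximates, not the SHAP library itself. The `model` function and the all-zeros `baseline` are assumptions chosen for illustration, and the brute-force enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial

def model(x):
    # Hypothetical model with an interaction between x1 and x2.
    return 2.0 * x[0] + x[1] * x[2]

def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one instance relative to a baseline input."""
    n = len(instance)

    def value(subset):
        # Features in `subset` take the instance's values;
        # all other features take the baseline's values.
        mixed = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]  # assumed reference point
phi = shapley_values(model, instance, baseline)
print(phi)
```

Here the contributions come out to roughly 2 for the first feature and 3 each for the second and third, which split the interaction term evenly. The values sum to the difference between the prediction for the instance and the prediction for the baseline, which is the property that makes this kind of explanation additive.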

Global interpretability

Global interpretability involves analyzing the overall behavior of a machine learning model. By examining how the model behaves across a range of inputs, it is possible to gain a more comprehensive understanding of how the model works. Global interpretability methods include techniques such as Partial Dependence Plots (PDPs) and Accumulated Local Effects (ALE) plots, which visualize how the model’s average output changes as one or two input variables are varied.
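The numbers behind a partial dependence plot can be computed by hand: fix one feature at a grid value in every row, average the predictions, and repeat for each grid value. The sketch below assumes a toy `model` and a four-row dataset invented for illustration, and it returns the curve as a list instead of plotting it.

```python
def model(row):
    # Hypothetical model: quadratic in x0, linear in x1.
    return row[0] ** 2 + 0.5 * row[1]

def partial_dependence(predict, X, feature, grid):
    """Average prediction when `feature` is forced to each grid value."""
    curve = []
    for value in grid:
        # Overwrite the chosen feature in every row, keep the others as observed.
        preds = [predict(r[:feature] + [value] + r[feature + 1:]) for r in X]
        curve.append(sum(preds) / len(preds))
    return curve

# Toy dataset (an assumption for illustration): columns are x0 and x1.
X = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0], [3.0, 6.0]]
grid = [0.0, 1.0, 2.0]

pd_x0 = partial_dependence(model, X, feature=0, grid=grid)
print(pd_x0)  # [1.5, 2.5, 5.5]
```

The curve rises quadratically with `x0`, shifted by the average effect of `x1`. Partial dependence averages over combinations of feature values that may never occur together in real data, which is the weakness that ALE plots are designed to address when features are correlated.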

Rule-based systems

Rule-based systems describe the behavior of a machine learning model as a set of human-readable if-then rules. By generating rules that describe how the model makes its predictions, it is possible to provide a more understandable explanation of the model’s decision-making process. This approach is a natural fit for decision trees, where every path from the root to a leaf is already a rule. It can also be applied to random forests by extracting rules from the individual trees, although the combined ensemble is harder to summarize than a single tree.
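As a small illustration of how a decision tree maps onto rules, the sketch below walks every root-to-leaf path of a tree and prints it as an if-then statement. The nested-dictionary tree format, the feature names, and the thresholds are all hypothetical and chosen only for this example.

```python
# Hypothetical decision tree stored as nested dictionaries.
# Internal nodes test `feature <= threshold`; leaves carry a decision.
tree = {
    "feature": "income", "threshold": 50000,
    "left": {"leaf": "deny"},
    "right": {
        "feature": "debt_ratio", "threshold": 0.4,
        "left": {"leaf": "approve"},
        "right": {"leaf": "deny"},
    },
}

def extract_rules(node, conditions=()):
    """Turn each root-to-leaf path into one IF ... THEN ... rule."""
    if "leaf" in node:
        premise = " AND ".join(conditions) if conditions else "TRUE"
        return [f"IF {premise} THEN {node['leaf']}"]
    feature, threshold = node["feature"], node["threshold"]
    # The left branch is taken when the test holds, the right branch otherwise.
    left = extract_rules(node["left"], conditions + (f"{feature} <= {threshold}",))
    right = extract_rules(node["right"], conditions + (f"{feature} > {threshold}",))
    return left + right

rules = extract_rules(tree)
for rule in rules:
    print(rule)
```

For this tree the output is three rules, one per leaf. Deeper trees produce longer and more numerous rules, so in practice rule lists are often pruned or simplified before being shown to a reader.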

Simplified models

Simplified models, often called surrogate models, are interpretable models trained to approximate the behavior of a more complex machine learning model. Explanations are then read from the surrogate instead of the original model, which makes them easier to understand. Surrogates typically take the form of linear models or shallow decision trees, and they are particularly useful for complex models that are difficult to explain directly. An explanation is only as trustworthy as the surrogate’s agreement with the original model, so that agreement should be measured.
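The sketch below shows the surrogate idea in its smallest form: a one-split decision tree (a stump) is fitted to the outputs of a black-box function, and its fidelity, meaning the share of inputs on which it agrees with the black box, is reported. The `black_box` function, the input grid, and the assumption that the stump predicts 1 above its threshold are all illustrative choices.

```python
def black_box(x):
    # Hypothetical opaque model on a single numeric feature.
    return 1 if x ** 3 - x > 1.0 else 0

def fit_stump(xs, labels):
    """Find the threshold t so that `x > t` best matches the black-box labels."""
    xs_sorted = sorted(xs)
    # Candidate thresholds are midpoints between neighboring inputs.
    candidates = [(a + b) / 2 for a, b in zip(xs_sorted, xs_sorted[1:])]
    best_threshold, best_fidelity = None, -1.0
    for t in candidates:
        agree = sum((1 if x > t else 0) == y for x, y in zip(xs, labels))
        fidelity = agree / len(xs)
        if fidelity > best_fidelity:
            best_threshold, best_fidelity = t, fidelity
    return best_threshold, best_fidelity

# Query the black box on a grid of inputs and train the surrogate on its answers.
xs = [i / 10 for i in range(31)]          # 0.0, 0.1, ..., 3.0
labels = [black_box(x) for x in xs]
threshold, fidelity = fit_stump(xs, labels)

print(f"surrogate rule: predict 1 if x > {threshold:.2f}")
print(f"fidelity to black box: {fidelity:.2%}")
```

In this toy case the stump reproduces the black box perfectly on the grid, because the underlying decision is a single cut-off. With a real model the fidelity is usually below 100%, and a low value is a signal that the surrogate’s explanation should not be relied on.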

Counterfactual analysis

Counterfactual analysis involves identifying what changes to the input data would be required to change the output of a machine learning model. By examining these counterfactual scenarios, it is possible to gain insight into how the model works and why it is making its predictions. Counterfactual analysis can be particularly useful for identifying cases where a model’s behavior is unexpected or where it is biased.
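A counterfactual search can be sketched as a simple loop: change one feature step by step until the model’s decision flips, and report the first input for which it does. The loan `model`, its integer scoring rule, and the feature names below are hypothetical. Practical counterfactual methods search over several features at once and add constraints so that the suggested changes are realistic.

```python
def model(applicant):
    # Hypothetical loan model using integer arithmetic:
    # income in thousands, debt as a percentage of income.
    score = 2 * applicant["income_k"] - applicant["debt_pct"] - 50
    return "approve" if score >= 0 else "deny"

def find_counterfactual(predict, instance, feature, step, max_steps=100):
    """Smallest change to one feature, in multiples of `step`, that flips the output."""
    original = predict(instance)
    for k in range(1, max_steps + 1):
        candidate = dict(instance)
        candidate[feature] = instance[feature] + k * step
        if predict(candidate) != original:
            return candidate
    return None  # no flip found within the search budget

applicant = {"income_k": 40, "debt_pct": 40}
print(model(applicant))  # deny

cf_income = find_counterfactual(model, applicant, "income_k", step=1)
cf_debt = find_counterfactual(model, applicant, "debt_pct", step=-1)
print(cf_income)  # {'income_k': 45, 'debt_pct': 40}
print(cf_debt)    # {'income_k': 40, 'debt_pct': 30}
```

Each result reads as a statement about the model: the application would have been approved with an income of 45 instead of 40, or with a debt percentage of 30 instead of 40. If the same search showed that changing a feature that should be irrelevant flips the decision, that would be a sign of unexpected or biased behavior.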

In conclusion, there are several methods for making machine learning models explainable, and the choice of method will depend on the specific problem being addressed and the type of model being used. By understanding these methods and applying them appropriately, it is possible to make models more transparent and understandable, and easier to check for unfair or biased decisions.