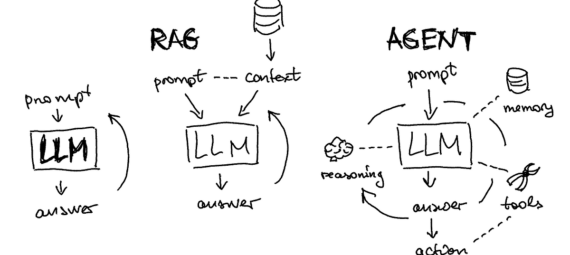

Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), and autonomous agents are among the most transformative recent advancements for managing, understanding, and acting on large volumes of data. But how do these technologies differ, and when should you use each? In this blog post, we’ll explore the distinctions between LLMs, RAG, and agents, highlighting their core functionalities, strengths, and the best scenarios to use them.

Large Language Models (LLMs)

LLMs are powerful machine learning models trained on vast amounts of text data to understand and generate language and to interact in a human-like manner. You might be familiar with popular LLMs like GPT-3 or GPT-4, which have been used in applications ranging from creative writing and content generation to chatbots and customer support.

Core Functionality

The main strength of LLMs lies in their ability to generate fluent and contextually relevant responses. They excel at understanding language nuances, providing creative output, and holding natural conversations.

Strengths and Weaknesses

LLMs are incredible at generating content that is contextually rich, engaging, and creative. However, they are limited by the static nature of their training data. Their knowledge stops at the point where training ended, so answers about anything that changed afterwards may be outdated or factually incorrect.

Best Use Scenarios

LLMs shine in scenarios where creativity and human-like interaction are needed—think interactive Q&A systems, automated creative writing, or customer support that relies on an engaging tone.

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) takes LLMs one step further by pairing them with a retrieval mechanism. This retrieval component allows RAG systems to pull relevant information from external databases, the internet, or specialized knowledge bases, improving the accuracy and relevance of responses.

Core Functionality

RAG combines the strengths of LLMs with data retrieval at query time. Before the model answers, the system fetches relevant passages and adds them to the prompt, so the response can draw on information that is more accurate and more recent than the model's training data. This makes RAG particularly effective when precise and up-to-date information is critical.
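
The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `DOCUMENTS` list, the word-overlap scoring in `retrieve`, and the `generate` callable (a stand-in for whatever LLM you call) are all assumptions made for the example. Real systems usually rank passages with embeddings or a search index.

```python
# Hypothetical in-memory knowledge base; a real system would query a
# database, search index, or vector store instead.
DOCUMENTS = [
    "The support desk is open Monday to Friday from 9am to 5pm.",
    "Refunds are processed within 14 days of receiving the returned item.",
    "Premium accounts include priority email support.",
]


def tokenize(text):
    """Lowercase the text and strip basic punctuation from each word."""
    return [word.strip(".,?!").lower() for word in text.split()]


def retrieve(query, documents, top_k=1):
    """Rank documents by shared words with the query (a toy scoring rule)."""
    query_words = set(tokenize(query))
    scored = [(len(query_words & set(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query at all.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, context_docs):
    """Place the retrieved passages in front of the user's question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def answer(query, generate, documents=DOCUMENTS):
    """Retrieve first, then hand the augmented prompt to the model."""
    context_docs = retrieve(query, documents)
    return generate(build_prompt(query, context_docs))
```

Passing the model in as `generate` keeps the sketch independent of any particular LLM provider. The point is the order of operations: retrieval happens first, and the model only sees the question together with the retrieved context.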

Strengths and Weaknesses

The primary strength of RAG is its ability to provide accurate and relevant responses based on real-time data retrieval. This makes it a better choice for applications that demand high levels of accuracy or involve dynamic topics. However, RAG is dependent on the quality of the retrieval process and the availability of reliable data sources.

Best Use Scenarios

RAG is best used when factual accuracy and recency are crucial. For example, FAQ assistants that provide up-to-date information from trusted databases or chatbots answering questions about ongoing events would greatly benefit from this technology.

Agents

Agents are autonomous programs designed to perform specific tasks based on the instructions they receive. While LLMs and RAG focus on understanding and generating human-like language, agents are action-oriented and are often used in combination with LLMs to complete structured tasks.

Core Functionality

Agents are capable of executing commands, automating workflows, and performing actions like interacting with APIs, processing data, or triggering other services. They often work in tandem with LLMs, which provide the language-based understanding and direction that guide the agents’ actions.
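
As a rough sketch of how this division of labor can look in code, the example below separates planning from execution. Everything in it is hypothetical: `fake_planner` stands in for an LLM that turns a request into structured tool calls, `ORDERS` stands in for an external database, and `send_email` only returns a log line rather than calling a real email service.

```python
# Hypothetical in-memory "database" standing in for an external system.
ORDERS = {"A100": "pending"}


def update_order(order_id, status):
    """Tool: change the status of an order in the toy database."""
    ORDERS[order_id] = status
    return f"order {order_id} set to {status}"


def send_email(to, subject):
    """Tool: a real agent would call an email API; this only returns a log line."""
    return f"email queued for {to}: {subject}"


# Registry of the actions the agent is allowed to perform.
TOOLS = {"update_order": update_order, "send_email": send_email}


def fake_planner(request):
    """Stand-in for an LLM that maps a request to structured tool calls."""
    if "ship" in request.lower():
        return [
            {"tool": "update_order",
             "args": {"order_id": "A100", "status": "shipped"}},
            {"tool": "send_email",
             "args": {"to": "customer@example.com",
                      "subject": "Your order has shipped"}},
        ]
    return []


def run_agent(request, planner=fake_planner, tools=TOOLS):
    """Execute each planned step in order and collect a log of results."""
    log = []
    for step in planner(request):
        tool = tools.get(step["tool"])
        if tool is None:
            # Never execute something outside the registry.
            log.append(f"skipped unknown tool {step['tool']}")
            continue
        log.append(tool(**step["args"]))
    return log
```

Calling `run_agent("Please ship order A100")` updates the toy database and returns one log entry per executed step. Restricting execution to a fixed tool registry is one simple way to keep the agent's actions well-defined.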

Strengths and Weaknesses

The key strength of agents is their ability to efficiently execute specialized tasks, particularly when these tasks are well-defined. Agents excel at interacting with databases, automating actions, or performing data processing. However, they lack the language comprehension and generative capabilities that LLMs have and thus rely heavily on LLMs for interpretation.

Best Use Scenarios

Agents are ideal for automating workflows or executing specific tasks that need structure and coordination. Examples include automating email sending, database updates, or performing data analysis based on LLM-derived insights. When combined with LLMs, agents form a powerful tool for task automation—like managing routine activities or interacting with software systems.

Summary: Choosing the Right Tool for the Right Job

– **LLMs** are invaluable for generating language-rich content and holding engaging conversations, but their static knowledge may limit them in providing real-time, accurate responses.

– **RAG** enhances LLMs by adding a dynamic retrieval component, allowing for accurate, up-to-date information. This makes it ideal for situations where factual precision is key.

– **Agents** excel at executing tasks and automating workflows, especially when combined with LLMs for language-based guidance. They focus on getting things done efficiently and effectively.

| Feature/Aspect | Large Language Models (LLMs) | Retrieval-Augmented Generation (RAG) | Agents |
| --- | --- | --- | --- |
| Definition | Machine learning models trained on large text data to understand and generate human-like responses. | Combines LLMs with retrieval mechanisms to pull in relevant information from external sources. | Autonomous programs designed to execute specific tasks, often collaborating with LLMs. |
| Core Functionality | Text generation, language understanding, conversation. | Enhance LLM responses by retrieving additional context or knowledge. | Execute structured actions, automate workflows, perform specific tasks. |
| Context Handling | Relies on pre-trained knowledge, which may become outdated. | Augments responses with real-time or domain-specific information. | Executes commands or tasks based on current context, often guided by LLMs. |
| Use Cases | Chatbots, automated customer support, content generation. | FAQ assistants, chatbots providing precise data. | Data analysis, task automation, API interactions. |
| Interaction with Data | Limited to what the model has learned (static). | Uses retrieval to dynamically pull relevant data. | Can interact with databases, APIs, and external systems. |
| Strengths | Fluent, creative responses, language-rich content. | Provides accurate, up-to-date information. | Efficient at executing specialized tasks, good at automating workflows. |
| Weaknesses | May produce outdated or incorrect information. | Dependent on quality of retrieval and data availability. | Relies on LLMs for complex language understanding. |
| Best Use Scenarios | Creative writing, conversational AI, interactive Q&A. | Factual answers with real-time data. | Automating workflows, interacting with software systems. |

Example Use Cases

Imagine you need a chatbot to answer questions about a recent event. An LLM alone might not suffice, as it may not have the latest information—this is where **RAG** can come in, fetching updated details and providing informed responses. Now, if you need to automate a process based on user inputs, like sending data to an external system or updating a database, you might leverage an **agent** working in tandem with an LLM to get the job done seamlessly.
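
The reasoning in these two examples can be condensed into a small decision helper. This is only an illustration of the rule of thumb used in this post, with a hypothetical function name and two yes/no inputs chosen for the example. Real projects weigh more factors, such as cost, latency, and data quality.

```python
def choose_components(needs_current_facts, needs_actions):
    """Return the building blocks suggested by the rule of thumb in this post."""
    # Every option discussed here starts from an LLM for language handling.
    components = ["LLM"]
    if needs_current_facts:
        # Answers depend on information newer than, or outside, the training data.
        components.append("RAG")
    if needs_actions:
        # The system must do something, such as update a database or call an API.
        components.append("agent")
    return components
```

For the recent-event chatbot, `choose_components(True, False)` suggests an LLM with RAG. For the process-automation case, `choose_components(False, True)` suggests an LLM paired with an agent.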

In today’s era of information overload, understanding the capabilities of LLMs, RAG, and agents—and how they can work together—is key to building systems that are both smart and actionable. By leveraging the right combination of these tools, you can achieve a balance of human-like interaction, real-time information accuracy, and efficient task automation.