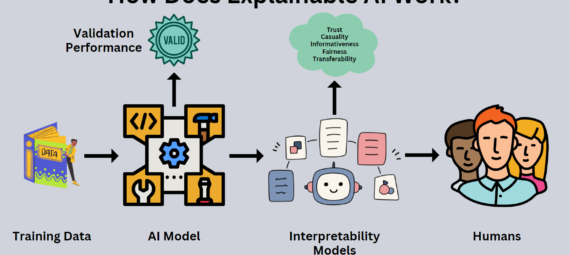

Introduction: In the ever-evolving landscape of marketing, the ability to communicate precisely with the right customer at the right moment is a strategic imperative for any company. Success depends on personalized strategies that drive conversion, engagement, and resonance. Achieving this requires a multi-faceted approach: data analysis to find the best touchpoints, customer segmentation to tailor messaging, and predictive analytics to anticipate needs. Predictive models only help refine these strategies, however, if marketers can understand why a model makes a given prediction, for example why it flags a customer as likely to churn. This article explores Explainable Artificial Intelligence (XAI) methods, focusing on techniques designed to improve the interpretability of machine learning models.

Interpretability Methods:

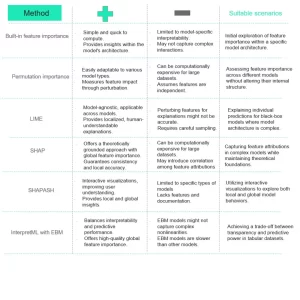

Built-in Feature Importance:

Overview: XGBoost provides a built-in feature importance function that scores how relevant each feature is for predicting the target. These scores are useful for feature selection, for interpreting the model, and for assessing each feature's influence on predictions.

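Pros and Cons: The scores come directly from the trained model, but they depend on the importance type. Weight counts how many times a feature is used to split across all trees, Gain is the average loss reduction of the splits that use the feature, and Cover is the average coverage of those splits (the training samples they affect, measured as the sum of second-order gradients). The three types can rank the same features differently, so they should be understood and compared before drawing conclusions. They are also global scores and do not explain individual predictions.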
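To make the three definitions concrete, here is a minimal sketch that aggregates per-split statistics the way the Weight, Gain and Cover types are defined. The split records and the feature names (`tenure`, `monthly_charges`, `support_calls`) are hypothetical, made up for illustration; in XGBoost itself the corresponding scores come from `Booster.get_score(importance_type=...)`.

```python
from collections import defaultdict

# Hypothetical (feature, split_gain, split_cover) records for every internal
# node across all trees of a trained booster. Values are made up for illustration.
SPLITS = [
    ("tenure", 12.0, 800.0),
    ("tenure", 6.0, 500.0),
    ("monthly_charges", 4.0, 300.0),
    ("monthly_charges", 2.0, 200.0),
    ("monthly_charges", 3.0, 150.0),
    ("support_calls", 10.0, 100.0),
]


def importance(splits, importance_type):
    """Aggregate split records into one score per feature."""
    count = defaultdict(int)
    total_gain = defaultdict(float)
    total_cover = defaultdict(float)
    for feature, gain, cover in splits:
        count[feature] += 1
        total_gain[feature] += gain
        total_cover[feature] += cover

    if importance_type == "weight":  # number of splits using the feature
        return {f: float(n) for f, n in count.items()}
    if importance_type == "gain":  # average gain of those splits
        return {f: total_gain[f] / count[f] for f in count}
    if importance_type == "cover":  # average cover of those splits
        return {f: total_cover[f] / count[f] for f in count}
    raise ValueError(f"unknown importance_type: {importance_type}")


for kind in ("weight", "gain", "cover"):
    scores = importance(SPLITS, kind)
    top = max(scores, key=scores.get)
    print(f"{kind:>6}: top feature = {top}, scores = {scores}")
```

With these toy numbers each importance type names a different top feature (`monthly_charges` by Weight, `support_calls` by Gain, `tenure` by Cover), which is why comparing the types matters.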

Permutation Importance:

Overview: This method measures a feature's significance by randomly shuffling its values, which breaks the feature's relationship with the target, and observing how much model performance drops. A large drop means the model relies heavily on that feature; little or no drop means the feature contributes little.

Pros and Cons: Permutation importance is model-agnostic and straightforward to interpret, but it can be computationally expensive on large datasets because the model must be re-scored for every feature and every shuffle repetition.
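A minimal, dependency-free sketch of the procedure follows. The toy model (a fixed rule that only reads feature 0) and the synthetic data are hypothetical; scikit-learn ships a production version as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and y
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical churn rule: predict churn (1) when feature 0 >= 10.
# Feature 1 is noise that the model never reads.
def model(row):
    return 1 if row[0] >= 10 else 0


X = [[i, (i * 7) % 5] for i in range(20)]
y = [model(row) for row in X]

print(permutation_importance(model, X, y))
```

Shuffling feature 1 never changes a prediction, so its importance is exactly 0; shuffling feature 0 scrambles which rows cross the threshold, so accuracy drops and its importance is positive.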

LIME (Local Interpretable Model-agnostic Explanations):

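Overview: LIME explains an individual prediction of a complex model by approximating the model locally, around that instance, with a simple interpretable surrogate (typically a sparse linear model) fitted to perturbed samples weighted by their proximity to the instance. The surrogate explains why that specific prediction was made.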

Pros and Cons: LIME is model-agnostic and provides local interpretability, but it may not capture global model behavior.
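The core LIME recipe (perturb the instance, weight samples by proximity, fit a weighted linear surrogate) can be sketched without dependencies as below. This is a simplification, not the `lime` package's algorithm: its `LimeTabularExplainer` samples perturbations from training-data statistics, can discretize features, and fits a regularized (ridge) model. The black-box function and all parameter values here are hypothetical.

```python
import math
import random


def black_box(x):
    """Hypothetical nonlinear model, e.g. a churn score from two features."""
    return x[0] ** 2 + 3.0 * x[1]


def solve(A, b):
    """Solve the linear system A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [A[i][:] + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))) / M[r][r]
    return w


def lime_explain(f, x, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate to f around x; return its slopes."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted normal equations (X^T W X) w = X^T W y.
    A = [[0.0] * (d + 1) for _ in range(d + 1)]
    b = [0.0] * (d + 1)
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in range(d)]
        z = [xi + oi for xi, oi in zip(x, offsets)]
        # Exponential kernel: nearby perturbations count more.
        weight = math.exp(-sum(o * o for o in offsets) / kernel_width ** 2)
        features = [1.0] + offsets  # intercept + offset from the explained point
        target = f(z)
        for i in range(d + 1):
            b[i] += weight * features[i] * target
            for j in range(d + 1):
                A[i][j] += weight * features[i] * features[j]
    coefficients = solve(A, b)
    return coefficients[1:]  # drop the intercept, keep one slope per feature


print(lime_explain(black_box, [1.0, 2.0]))
```

Around x = (1, 2) the surrogate's slopes come out close to the black box's local derivatives (2·x0 = 2 and 3). Far from that point the quadratic term makes this linear explanation wrong, which illustrates why a local surrogate may not capture global model behavior.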

SHAP (SHapley Additive exPlanations):

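Overview: SHAP uses Shapley values from cooperative game theory to explain machine learning predictions. For a given prediction, it assigns each feature a contribution, and these contributions sum to the difference between the prediction and the model's baseline (average) prediction.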

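Pros and Cons: SHAP has a strong theoretical foundation and offers both local interpretability (per-prediction contributions) and global interpretability (contributions aggregated over many predictions, e.g. the mean absolute value per feature). However, exact Shapley values require evaluating every coalition of features, so SHAP can be computationally intensive on large datasets unless a model-specific algorithm, such as TreeSHAP for tree ensembles, is available.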
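To show what SHAP computes, here is a brute-force sketch of exact Shapley values for a single prediction. It assumes a simple value function (features outside a coalition are set to a fixed baseline vector) and a hypothetical model with one interaction term; the `shap` library instead uses efficient estimators such as `shap.TreeExplainer` and background data. Enumerating every coalition is exponential in the number of features, which is why SHAP can be computationally intensive.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x, with absent features set to baseline."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take their real value; the rest use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical model: a main effect on feature 0, an interaction between 1 and 2.
def model(z):
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
print(sum(phi), model(x) - model(baseline))
```

The interaction's contribution of 6 is split evenly between features 1 and 2 (3 each), feature 0 receives its main effect of 2, and the values add up to model(x) - model(baseline) = 8.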

Shapash:

Overview: Shapash is a Python library, built on SHAP, that makes machine learning models on tabular data easier to interpret. It attributes feature contributions to each prediction and presents them in an interactive visual interface.

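Pros and Cons: Shapash provides both global and local interpretability through a user-friendly interface, making model predictions more transparent and easier to understand. Because it relies on an underlying explainer such as SHAP, it also inherits that explainer's computational cost.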
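One idea behind Shapash-style summaries is to rank each prediction's contributions and keep only the strongest few, while the global view aggregates contributions across predictions. The sketch below reproduces that idea in plain Python on hypothetical SHAP contributions; it does not use Shapash's API. In Shapash itself the entry point is the `SmartExplainer` class, whose exact signatures may differ between versions, so check the documentation for the installed release.

```python
# Hypothetical SHAP contributions to three customers' churn scores.
CONTRIBUTIONS = [
    {"tenure": -0.30, "monthly_charges": 0.10, "support_calls": 0.25},
    {"tenure": 0.05, "monthly_charges": 0.40, "support_calls": -0.05},
    {"tenure": -0.20, "monthly_charges": 0.00, "support_calls": 0.15},
]


def top_contributions(contribs, max_contrib=2):
    """Local summary: the max_contrib strongest contributions, by absolute value."""
    ranked = sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return ranked[:max_contrib]


def global_importance(rows):
    """Global summary: mean absolute contribution of each feature."""
    return {f: sum(abs(r[f]) for r in rows) / len(rows) for f in rows[0]}


for customer, contribs in enumerate(CONTRIBUTIONS):
    parts = ", ".join(f"{name} {value:+.2f}" for name, value in top_contributions(contribs))
    print(f"customer {customer}: {parts}")

print(sorted(global_importance(CONTRIBUTIONS).items(), key=lambda kv: -kv[1]))
```

Limiting each explanation to its top contributions keeps per-customer explanations short enough for non-specialists, while the global ranking shows which features matter across the whole portfolio.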

InterpretML/EBM (Explainable Boosting Machines):

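Overview: InterpretML is a Python library that offers a unified interface for model interpretation, including EBM. EBM is a glass-box model: a generalized additive model whose per-feature shape functions are learned with boosting, cycling through the features one at a time. Because each prediction is a sum of per-feature terms, EBM strikes a compromise between accuracy and interpretability.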

Pros and Cons: EBMs can be slightly less accurate than some black-box counterparts, but they excel in intelligibility: the learned shape functions give global insight, and the per-feature terms of each prediction give local insight.
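The additive structure that makes EBMs intelligible can be sketched in plain Python: cycle through the features and, for each one, boost a per-bin shape function on the current residuals with a small learning rate. This is a deliberately simplified version under stated assumptions (features are already binned integers, the loss is squared error, each boosting step fits a per-bin mean instead of the shallow trees EBM uses, and there are no interaction terms); InterpretML's real implementation is `interpret.glassbox.ExplainableBoostingClassifier` / `ExplainableBoostingRegressor`. The data and true shape values are hypothetical.

```python
def fit_additive_boosting(X, y, n_bins, n_rounds=200, learning_rate=0.1):
    """Round-robin boosting of one per-bin shape function per feature."""
    n_features = len(n_bins)
    intercept = sum(y) / len(y)
    shapes = [[0.0] * n_bins[j] for j in range(n_features)]
    pred = [intercept] * len(y)
    for _ in range(n_rounds):
        for j in range(n_features):  # visit features one at a time
            sums = [0.0] * n_bins[j]
            counts = [0] * n_bins[j]
            for row, target, p in zip(X, y, pred):
                sums[row[j]] += target - p  # residual under the current model
                counts[row[j]] += 1
            steps = [learning_rate * s / c if c else 0.0 for s, c in zip(sums, counts)]
            for b, step in enumerate(steps):
                shapes[j][b] += step
            pred = [p + steps[row[j]] for row, p in zip(X, pred)]
    return intercept, shapes


def explain_local(shapes, row):
    """Per-feature terms of one prediction (the local explanation)."""
    return [shapes[j][value] for j, value in enumerate(row)]


def predict(intercept, shapes, row):
    return intercept + sum(explain_local(shapes, row))


# Hypothetical additive ground truth: feature 0 has 3 bins, feature 1 has 2.
TRUE_SHAPE_0 = [0.0, 1.0, 3.0]
TRUE_SHAPE_1 = [0.0, 2.0]
X = [[a, b] for a in range(3) for b in range(2)]
y = [TRUE_SHAPE_0[a] + TRUE_SHAPE_1[b] for a, b in X]

intercept, shapes = fit_additive_boosting(X, y, n_bins=[3, 2])
print("shape of feature 0:", [round(v, 3) for v in shapes[0]])
print("local terms for", X[5], ":", [round(v, 3) for v in explain_local(shapes, X[5])])
```

The learned shape functions recover the true ones up to a constant absorbed by the intercept, and every prediction decomposes into one readable term per feature.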

Conclusion: This overview of interpretability methods for machine learning models in marketing underscores the importance of transparency and accountability. Each method brings its own advantages, giving practitioners a diverse set of tools for balancing prediction accuracy and model transparency. As Explainable Artificial Intelligence continues to evolve, integrating these methods into marketing strategies supports ethical and responsible AI development and helps companies make informed, customer-centric decisions.

Source

https://heka-ai.medium.com/scoring-models-interpretability-explainable-ai-applied-to-churn-prediction-fbcd861c32ab