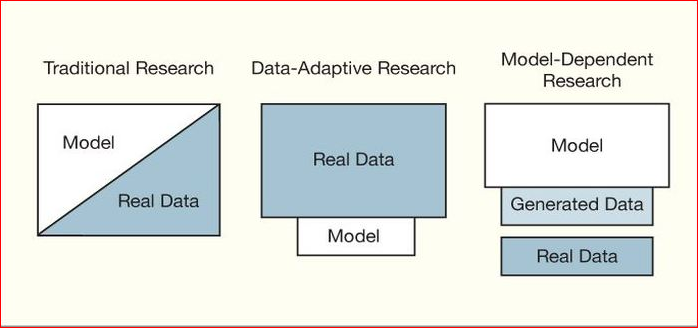

Over the last decade there has been a substantial increase in the volume and complexity of the data we collect and store. We now also see growing demand for real-time data processing, that is, continuous input and output of data. Accordingly, the approach to data analysis and data processing has changed as well. We are witnessing a move from a traditional, static approach to more adaptive and model-dependent approaches.

The traditional approach to research and statistical inference begins with the specification of a theory or a model. Classical or Bayesian methods such as regression models, multivariate data analysis models and time series models are used. The model-building process involves fitting the model to the data and checking model accuracy with diagnostics.
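To make the traditional workflow concrete, here is a minimal sketch using only the Python standard library. It assumes the simplest possible model, a straight line, and synthetic data generated for illustration; the helper names `fit_simple_ols` and `diagnostics` are hypothetical and do not come from the source book. The model is specified first, then fitted, then checked.

```python
import random
import statistics


def fit_simple_ols(x, y):
    """Fit the pre-specified model y = intercept + slope * x by least squares."""
    mean_x, mean_y = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def diagnostics(x, y, intercept, slope):
    """Basic fit checks: R-squared and the mean of the residuals."""
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    mean_y = statistics.fmean(y)
    ss_res = sum(r ** 2 for r in residuals)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return {
        "r_squared": 1 - ss_res / ss_tot,
        "mean_residual": statistics.fmean(residuals),
    }


# Synthetic data for illustration only: a known line plus Gaussian noise.
rng = random.Random(0)
x = [i / 10 for i in range(100)]
y = [2.0 + 0.5 * xi + rng.gauss(0, 0.3) for xi in x]

intercept, slope = fit_simple_ols(x, y)
report = diagnostics(x, y, intercept, slope)
print(f"intercept={intercept:.3f} slope={slope:.3f}")
print(f"R^2={report['r_squared']:.3f} mean residual={report['mean_residual']:.2e}")
```

In practice one would reach for a statistics package and a richer set of diagnostics (residual plots, tests on the coefficients); the point here is only the order of the steps: model first, data second.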

In an adaptive approach the starting point is the data itself: the machine searches through data (on the Web, in in-house data, you name it) to identify useful predictors. Methods that fall into this category include neural networks, decision trees, random forests, support vector machines, and bagging and boosting methods. Their beauty is that hardly any theory or hypothesis is needed prior to running the analysis. That’s the world of machine learning, also called data mining. We call these methods ‘adaptive’ because they adapt to the available data and can represent nonlinear relationships and interactions among variables. In short, it’s the data that determines the model.
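As a rough illustration of "the data determines the model", the sketch below fits a single decision stump, the smallest building block of decision trees and boosting. It is not a full random forest or neural network, and the function names (`fit_stump`, `squared_error`) and the synthetic data are assumptions made for this example. No hypothesis says which predictor matters; the search over predictors and thresholds finds it.

```python
import random


def squared_error(values):
    """Sum of squared deviations from the mean (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def fit_stump(rows, targets):
    """Try every predictor and threshold; keep the split with the lowest error."""
    best = None
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [t for row, t in zip(rows, targets) if row[feature] <= threshold]
            right = [t for row, t in zip(rows, targets) if row[feature] > threshold]
            if not left or not right:
                continue  # a split must put data on both sides
            error = squared_error(left) + squared_error(right)
            if best is None or error < best["error"]:
                best = {
                    "feature": feature,
                    "threshold": threshold,
                    "error": error,
                    "left_mean": sum(left) / len(left),
                    "right_mean": sum(right) / len(right),
                }
    return best


# Synthetic data for illustration: three candidate predictors, but only
# predictor 1 drives the outcome, through a nonlinear step at 0.6.
rng = random.Random(1)
rows = [[rng.random() for _ in range(3)] for _ in range(200)]
targets = [(5.0 if row[1] > 0.6 else 1.0) + rng.gauss(0, 0.2) for row in rows]

stump = fit_stump(rows, targets)
print(f"chosen predictor: {stump['feature']}, threshold: {stump['threshold']:.3f}")
```

A linear model specified in advance would miss the step entirely unless the analyst had thought to include it; the stump recovers both the relevant predictor and the approximate break point from the data alone.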

Model-dependent research is the third approach. It begins with the specification of a model, which is then used to generate data, predictions, or recommendations. Simulations and mathematical programming methods are well-known examples of this kind of research. When employing a model-dependent approach, model accuracy is improved by comparing generated data with real data. When the amount of data is small and not heterogeneous, we employ the traditional approach. For large data sets with many predictors, data-adaptive techniques tend to work far better. Model-dependent approaches are mostly used for ‘trade-off’ studies (conjoint analysis, maximum difference scaling).
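The model-dependent loop (specify a model, generate data from it, compare with real data, adjust) can be sketched as a small simulation. Everything specific here is an assumption for illustration: the binomial purchase model, the function name `simulate_daily_sales`, the candidate conversion rates, and above all the "observed" sales figures, which are invented and not real data.

```python
import random
import statistics


def simulate_daily_sales(conversion_rate, visitors, days, rng):
    """Generate daily sales counts from a simple binomial purchase model."""
    return [
        sum(1 for _ in range(visitors) if rng.random() < conversion_rate)
        for _ in range(days)
    ]


# Hypothetical observed daily sales, made up purely for illustration.
observed_sales = [18, 22, 19, 25, 21, 17, 23, 20, 24, 21]
observed_mean = statistics.fmean(observed_sales)

rng = random.Random(42)
candidate_rates = [0.05, 0.10, 0.15, 0.20, 0.25]

# For each candidate parameter, generate data from the model and measure
# how far the simulated average is from the observed average.
gaps = {}
for rate in candidate_rates:
    simulated = simulate_daily_sales(rate, visitors=100, days=200, rng=rng)
    gaps[rate] = abs(statistics.fmean(simulated) - observed_mean)

best_rate = min(gaps, key=gaps.get)
print(f"conversion rate that best reproduces the observed data: {best_rate}")
```

Comparing only the means is a deliberately crude accuracy check; a real study would compare more of the distribution and refine the model structure, not just one parameter.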

In many cases it makes sense, and works best, to combine all of these approaches within a single study. It all depends on how the problem is defined and structured.

(Source: Modeling Techniques in Predictive Analytics, Thomas W. Miller, Pearson Education Inc., Upper Saddle River, New Jersey, 2014, pp. 3-5)