In my view, analytics, machine learning, data science, whatever you call it, always sits between exploration and explanation. The aim is always to understand the business problem and then convert it into a data problem through proper data exploration (from the problem-statement, domain, and data-quality perspectives, using data-literacy skills). Then we build the model, and once it is built it has to be validated both internally and externally. Here, internally means from a modelling perspective, and externally means the explanation or interpretability of the model in terms of the business statement and its applicability. As a practitioner, I consider interpretability/explanation critical, because it is what the client or customer needs in order to act on the results and address the business problem.

That's the reason why, for most of my career, I have tried as much as possible to fit statistical models that are inferential in nature and provide statistical significance for the findings. This makes it easy for me to explain the results to customers, because the models are linear and offer a benchmark (significance) for each finding.

But with the developments in learning-based approaches, many Machine Learning/Deep Learning algorithms have emerged that do a really good job of fitting models even to complex data, yet they lack a clear explanation. Therefore, as a practitioner, I have always had to compromise on explanation whenever I used these learning-based approaches. To work around this, I combined the two approaches and built solutions on that formulation: typically, I use learning-based algorithms for feature selection and then use inferential methods, which are easy to interpret, to build the final model.
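As a rough sketch of that two-step formulation, assuming Python with scikit-learn, NumPy, and SciPy on simulated data: a random forest ranks the features, the top `k` are kept, and an ordinary least squares model on those features reports coefficients with p-values. The helper names `select_features_rf` and `ols_with_pvalues`, the choice of random-forest importance as the selector, and the synthetic data are illustrative assumptions, not the exact pipeline from my projects.

```python
import numpy as np
from scipy import stats
from sklearn.ensemble import RandomForestRegressor


def select_features_rf(X, y, top_k, random_state=0):
    """Hypothetical helper: rank features by random-forest importance, keep top_k."""
    rf = RandomForestRegressor(n_estimators=200, random_state=random_state)
    rf.fit(X, y)
    order = np.argsort(rf.feature_importances_)[::-1]  # most important first
    return np.sort(order[:top_k])                      # column indices, ascending


def ols_with_pvalues(X, y):
    """Hypothetical helper: OLS with intercept; returns coefficients, SEs, p-values."""
    n = X.shape[0]
    Xd = np.column_stack([np.ones(n), X])              # add intercept column
    beta, *_ = np.linalg.lstsq(Xd, y, rcond=None)
    resid = y - Xd @ beta
    dof = n - Xd.shape[1]
    sigma2 = resid @ resid / dof                       # residual variance
    cov = sigma2 * np.linalg.inv(Xd.T @ Xd)
    se = np.sqrt(np.diag(cov))
    t_stat = beta / se
    pvals = 2 * stats.t.sf(np.abs(t_stat), dof)        # two-sided t-test
    return beta, se, pvals


# Simulated data (assumption): only x0 and x2 drive the outcome.
rng = np.random.default_rng(42)
n = 500
X = rng.normal(size=(n, 6))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(scale=1.0, size=n)

# Step 1: learning-based feature selection.
selected = select_features_rf(X, y, top_k=2)
# Step 2: interpretable inferential model on the selected features.
beta, se, pvals = ols_with_pvalues(X[:, selected], y)

for name, b, p in zip(["intercept"] + [f"x{j}" for j in selected], beta, pvals):
    print(f"{name:>9}: coef={b:+.3f}  p={p:.3g}")
```

The forest only decides which variables enter; the sign, size, and significance of each effect come from the linear model, which is what gets explained to the customer. Because the same data is used both to select and to test the features, these p-values tend to be optimistic, so fitting the final model on a held-out split is a common safeguard.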

Lately, however, we have seen developments in model interpretation. New methods such as LIME and SHAP have emerged that can provide interpretation/explanation both at an overall level (global interpretation) and at the respondent level (local interpretation), irrespective of the underlying model; these are called model-agnostic approaches. Because of this, I can now fit the data with any complex model (a neural network, Random Forest, XGBoost, etc.) and apply these explanation methods on top of it to explain its predictions. With this approach I don't need to compromise on either accuracy or interpretability.
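To make the global/local distinction concrete, here is a brute-force sketch of Shapley values (the quantity SHAP estimates) in Python with NumPy and scikit-learn. It is not the `shap` library: the hypothetical `exact_shapley` helper treats the model as a black-box `predict` function, fills the features outside each coalition from a background sample, and enumerates every coalition, which is only feasible for a handful of features. The gradient-boosting model and the synthetic data are assumptions for illustration.

```python
import itertools
import math

import numpy as np
from sklearn.ensemble import GradientBoostingRegressor


def exact_shapley(predict, x, background):
    """Hypothetical helper: exact Shapley values for one row `x`.

    The value of a coalition S is the mean prediction over `background`
    after overwriting the features in S with x's values (model-agnostic:
    only `predict` is called).
    """
    m = x.shape[0]
    cache = {}

    def value(coalition):
        key = tuple(sorted(coalition))
        if key not in cache:
            data = background.copy()
            if key:
                cols = list(key)
                data[:, cols] = x[cols]
            cache[key] = float(np.mean(predict(data)))
        return cache[key]

    phi = np.zeros(m)
    for j in range(m):
        others = [k for k in range(m) if k != j]
        for size in range(m):
            # Shapley weight |S|! (m - |S| - 1)! / m!
            weight = math.factorial(size) * math.factorial(m - size - 1) / math.factorial(m)
            for S in itertools.combinations(others, size):
                phi[j] += weight * (value(S + (j,)) - value(S))
    return phi


# Synthetic data (assumption): x0 linear, x1 non-linear, x2 and x3 pure noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 4))
y = 2.0 * X[:, 0] + X[:, 1] ** 2 + 0.1 * rng.normal(size=400)
model = GradientBoostingRegressor(random_state=0).fit(X, y)
background = X[:50]
base = model.predict(background).mean()

# Local explanation: contributions for a single respondent.
x_new = X[200]
phi = exact_shapley(model.predict, x_new, background)
print("baseline:", round(base, 3), "prediction:", round(model.predict(x_new[None, :])[0], 3))
print("contributions:", np.round(phi, 3))

# Global explanation: mean |contribution| per feature over many respondents.
sample = X[300:340]
global_importance = np.mean(
    [np.abs(exact_shapley(model.predict, row, background)) for row in sample], axis=0
)
print("mean |phi| per feature:", np.round(global_importance, 3))
```

The local contributions add up exactly to the respondent's prediction minus the baseline (the average prediction on the background sample), which is what lets the explanation read as "how much each variable pushed this prediction up or down". In practice the `shap` library avoids the exponential enumeration, for example with sampling-based approximation in `KernelExplainer` or tree-specific algorithms in `TreeExplainer`.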

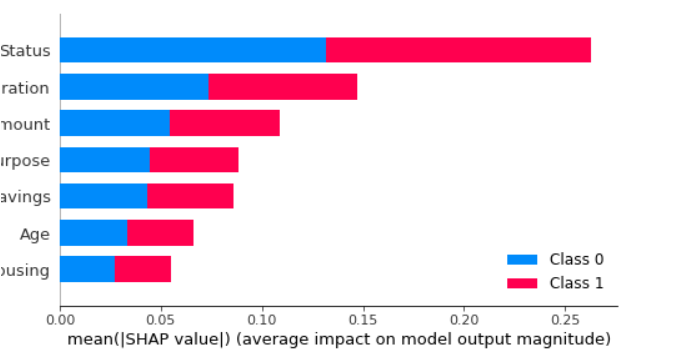

Below is a case study where I applied a complex model and used the SHAP method to explain, at both the global and the local level, why the model made its decisions.

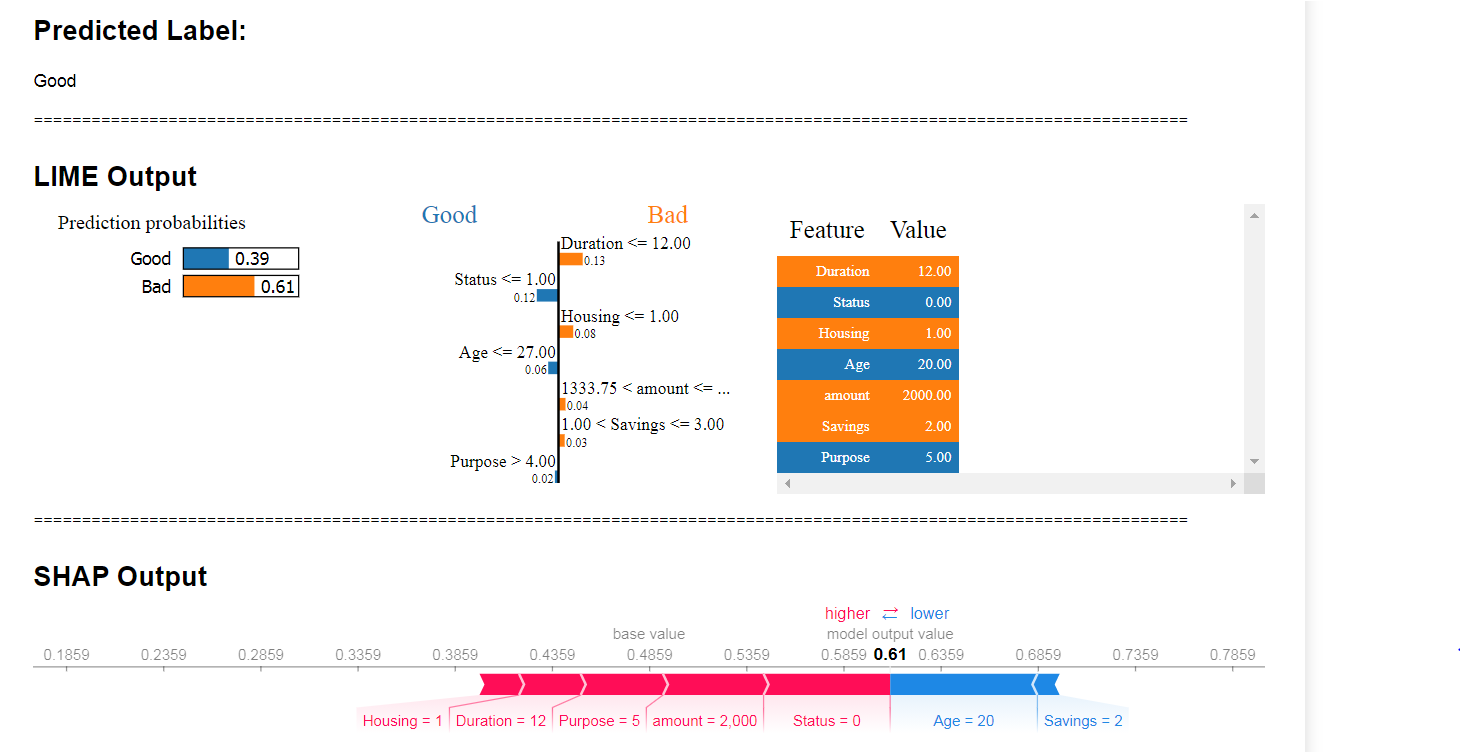

And for a new respondent, once we run the model we get not only the prediction but also an explanation of why the model made that decision, as shown below.