With over 15 years of experience in the analytics industry, I have seen the transformation it has undergone. In some respects the change has been drastic; in others, not much has changed at all. Let me try to explain this through the three main components of analytics (or of any process, for that matter):

• the input (data) – data in terms of the volume, variety and veracity

• the processing (tools/algorithms/ modelling)

• the output (how it is being consumed/ used)

I could break this down into three major phases; I would call them the ‘3 major revolutions in analytics’.

The first phase that I saw ran from 2003 to 2006. In terms of data, the datasets were small and mostly collected through primary research. The modelling was axiom based, i.e. rule or formula based, and the models were therefore validated through statistical significance. Traditional statistical tools like SPSS, SAS and MATLAB were used. The models were aggregate in nature (a single model considered everyone's data together).

Over the next three years (2006-2009), I saw the rise of experimental or evidence based analytics, where data was simulated around an experiment and models were built on that simulated data. Bayesian approaches and Markov chains gained a lot of prominence during this era. Markov processes are the basis for the general stochastic simulation methods known as Markov chain Monte Carlo, which are used for sampling from complex probability distributions and have found extensive application in Bayesian statistics. Models could now be built at an individual level, and these individual level estimates were the basis for deriving group level aggregated estimates. By now the datasets had grown drastically in volume, but we were still talking about structured data.
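To make the Markov chain Monte Carlo idea a little more concrete, here is a minimal sketch of a random-walk Metropolis sampler, one of the simplest MCMC methods. The example is my own illustration, not something taken from a particular project: it assumes a coin flipped 10 times with 7 heads and a uniform prior on the coin's bias, and the function names (`log_posterior`, `metropolis`) and tuning values (`step`, `n_samples`) are hypothetical choices.

```python
import math
import random


def log_posterior(theta, heads, flips):
    """Unnormalised log posterior of a coin's bias under a uniform prior."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # zero probability outside (0, 1)
    return heads * math.log(theta) + (flips - heads) * math.log(1.0 - theta)


def metropolis(heads, flips, n_samples=20000, step=0.2, seed=0):
    """Random-walk Metropolis: each draw depends only on the previous one."""
    rng = random.Random(seed)
    theta = 0.5  # arbitrary starting point for the chain
    current = log_posterior(theta, heads, flips)
    samples = []
    for _ in range(n_samples):
        # Propose a small random move away from the current state.
        proposal = theta + rng.gauss(0.0, step)
        candidate = log_posterior(proposal, heads, flips)
        # Accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        samples.append(theta)
    return samples


samples = metropolis(heads=7, flips=10)
kept = samples[2000:]  # drop an assumed burn-in period
print("estimated posterior mean:", sum(kept) / len(kept))
```

For this toy problem the exact posterior is Beta(8, 4), whose mean is 2/3, so the estimate can be checked against a known answer. In real models no such closed form exists, which is exactly why these samplers became so popular.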

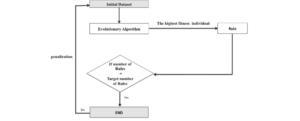

From 2009 onwards it was all about observational analytics (approaches based on ‘learning’ from data) by virtue of AI/ML algorithms. Huge technological advances were being made thanks to a very active open source community, and there were a lot of algorithms for both structured and unstructured data using AI/ML and DL.

We have lately been seeing a focus on model interpretability. We are now in the era of LIME: no black box models anymore! It is about decoding your model. LIME (Local Interpretable Model-agnostic Explanations) is a novel explanation technique that explains the prediction of any classifier in an interpretable and faithful manner by learning an interpretable model locally around the prediction.
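The "learning an interpretable model locally" part can be sketched in a few lines. This is a simplified illustration of the idea, not the `lime` package itself: it perturbs one instance, weights the perturbed points by how close they are to it, and fits a weighted linear model to the black box's outputs. The `black_box` function, the kernel `width` and the sampling `scale` are all assumptions made up for this example, and the sparse feature selection that LIME also performs is left out.

```python
import math
import random


def black_box(x):
    """Stand-in for an opaque classifier: a nonlinear score over two features."""
    return 1.0 / (1.0 + math.exp(-(3.0 * x[0] - x[1] ** 2)))


def solve(a, b):
    """Solve the small system a @ w = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (m[i][n] - tail) / m[i][i]
    return w


def explain_locally(model, instance, n_samples=2000, scale=0.3, width=0.5, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance."""
    rng = random.Random(seed)
    d = len(instance)
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        # Perturb the instance and query the black box at the new point.
        delta = [rng.gauss(0.0, scale) for _ in range(d)]
        y = model([xi + di for xi, di in zip(instance, delta)])
        # Nearby perturbations count more than distant ones.
        weight = math.exp(-sum(di * di for di in delta) / width ** 2)
        row = [1.0] + delta  # intercept term plus offsets from the instance
        for i in range(d + 1):
            xty[i] += weight * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * row[i] * row[j]
    coef = solve(xtx, xty)  # weighted least squares via the normal equations
    return coef[0], coef[1:]


intercept, weights = explain_locally(black_box, [0.0, 1.0])
print("local prediction:", intercept)
print("local feature weights:", weights)
```

The signs and sizes of the local weights are the explanation: around this particular instance, the first feature pushes the score up and the second pushes it down. A different instance would give a different local picture, which is the whole point of the approach.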

To conclude, I would say that the nature of data has changed drastically: datasets have become huge (data generated by business processes), are generated in real time (sensor data) and come from multiple sources (social media). This has led to significant changes in pre-processing, and the tools and algorithms have changed too. But the essential core of what we are trying to do with the data remains unchanged: classification, association, regression and clustering! It is like the same product repackaged and rebranded.