NEED

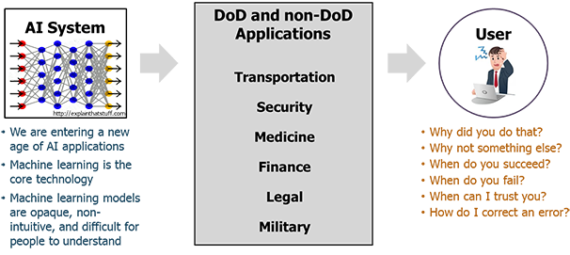

In recent years, the healthcare industry has seen the emergence of Artificial Intelligence (AI) and Machine Learning (ML) technologies that are changing how healthcare providers operate. These technologies let providers collect and analyze large amounts of patient data and generate insights and predictions that can improve patient outcomes and reduce costs. However, while AI/ML models have shown significant promise, they have also raised concerns about explainability and transparency, particularly when a model must account for the unique needs of each patient.

At Medeva, we recognize that patients are not interchangeable widgets on a manufacturing line. Each patient has unique needs and characteristics that must be taken into account when building predictive models. As a result, every model we build is evaluated against the components of interpretability, including fairness, transparency, trustworthiness, and accountability, as outlined in “A governance model for the application of AI in healthcare” (GMAIH) by Sandeep Reddy, Sonia Allan, Simon Coghlan, and Paul Cooper.

As standard practice, we treat model explainability as seriously as model performance. We do this by applying model-agnostic or model-specific Explainable AI (XAI) techniques and giving end-users clear explanations of how the model arrived at a particular decision. Every prediction comes with the reasons behind it, including predictions for new patients the model has not seen before.

Our pipeline applies both model-specific and model-agnostic XAI techniques. For linear models, we use model-specific XAI methods that provide both global and local explanations. For non-linear or black-box models, we use model-agnostic techniques such as LIME and SHAP to provide global and local explanations. In some cases, we also use Explainable Boosting Machines (EBMs), which are interpretable by design and provide both local and global explanations.
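SHAP is built on Shapley values from cooperative game theory. With only a few features, they can be computed exactly by enumerating every coalition of features, which makes the idea behind a model-agnostic local explanation concrete. The sketch below is a from-scratch illustration, not the `shap` library's API. The three-feature `black_box_risk` function, its coefficients, and the baseline patient are hypothetical, and features outside a coalition are simply set to a single baseline value (one of several ways to define a "missing" feature). Averaging the absolute values across patients turns these local attributions into a global importance ranking.

```python
from itertools import combinations
from math import factorial


def black_box_risk(features):
    """Hypothetical non-linear risk score; stands in for any black-box model."""
    systolic_bp, smoker, bmi = features
    score = 0.02 * (systolic_bp - 120) + 0.5 * smoker + 0.04 * (bmi - 25)
    # Interaction: smoking adds more risk when blood pressure is high.
    score += 0.01 * smoker * (systolic_bp - 120)
    return score


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict(x) relative to predict(baseline).

    Features outside a coalition take their baseline value. The cost grows
    exponentially with the number of features, so this is only for small n.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for a coalition of this size that excludes feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without_i = [x[j] if j in coalition else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]  # add feature i to the coalition
                phi[i] += weight * (predict(with_i) - predict(without_i))
    return phi


def global_importance(predict, patients, baseline):
    """Global explanation: mean absolute Shapley value per feature."""
    totals = [0.0] * len(baseline)
    for x in patients:
        for i, value in enumerate(shapley_values(predict, x, baseline)):
            totals[i] += abs(value)
    return [total / len(patients) for total in totals]


patient = [150, 1, 30]   # systolic BP (mmHg), smoker (0/1), BMI
baseline = [120, 0, 25]  # hypothetical reference patient
phi = shapley_values(black_box_risk, patient, baseline)
print([round(v, 3) for v in phi])  # [0.75, 0.65, 0.2]
```

The three values sum to the difference between the patient's score and the baseline score (1.6), and the 0.3 interaction term is split equally between blood pressure and smoking. Because exact enumeration is exponential in the number of features, libraries such as SHAP rely on approximations or model-specific shortcuts for realistic feature counts.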

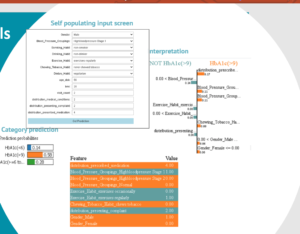

Here is an example of how our approach can provide actionable insights for healthcare providers:

Suppose we want to predict a patient's HbA1c risk category from their vitals, social and family history, and treatments. The model not only predicts the risk category but also shows the reasons for that prediction.

For example, if a patient is placed in the high HbA1c category, the explanation also shows which factors contributed most to that prediction, such as high blood pressure, smoking, and a non-vegetarian diet. These insights make the prediction actionable and support more targeted interventions by healthcare providers.
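To make the HbA1c example concrete, here is a minimal sketch that assumes the model is linear, so the model-specific explanation is exact: each feature's contribution is its coefficient times its deviation from a reference patient, and the contributions add up to the difference between the two predictions. The feature names, coefficients, intercept, and reference patient are hypothetical placeholders, not clinical estimates or Medeva's actual model. The category cut-offs (5.7% and 6.5%) are the commonly cited ADA thresholds, used here only for illustration. A contribution explains the model's output, not a clinical cause.

```python
# Hypothetical coefficients (HbA1c percentage points per unit); NOT clinical estimates.
COEFFICIENTS = {
    "systolic_bp": 0.02,      # per mmHg
    "smoker": 0.4,            # 1 if current smoker, else 0
    "non_vegetarian": 0.3,    # 1 if non-vegetarian diet, else 0
    "daily_steps_k": -0.05,   # per 1,000 steps/day
}
INTERCEPT = 3.0
# Hypothetical reference (average) patient used as the explanation baseline.
REFERENCE = {"systolic_bp": 125, "smoker": 0, "non_vegetarian": 0, "daily_steps_k": 6}


def predict_hba1c(patient):
    """Predicted HbA1c (%) from the hypothetical linear model."""
    return INTERCEPT + sum(COEFFICIENTS[f] * patient[f] for f in COEFFICIENTS)


def category(hba1c):
    # Illustrative cut-offs: 5.7% and 6.5% (commonly cited ADA thresholds).
    if hba1c >= 6.5:
        return "high"
    if hba1c >= 5.7:
        return "elevated"
    return "normal"


def explain(patient):
    """Prediction, category, and per-feature contributions vs. REFERENCE.

    For a linear model, coef * (x - reference) is an exact local explanation:
    the contributions sum to predict(patient) - predict(REFERENCE).
    """
    contributions = {
        f: COEFFICIENTS[f] * (patient[f] - REFERENCE[f]) for f in COEFFICIENTS
    }
    # Reasons = features pushing the prediction up, largest first.
    reasons = sorted(
        ((f, c) for f, c in contributions.items() if c > 0),
        key=lambda item: item[1],
        reverse=True,
    )
    hba1c = predict_hba1c(patient)
    return {
        "hba1c": hba1c,
        "category": category(hba1c),
        "reasons": reasons,
        "contributions": contributions,
    }


patient = {"systolic_bp": 165, "smoker": 1, "non_vegetarian": 1, "daily_steps_k": 8}
result = explain(patient)
print(result["category"], round(result["hba1c"], 2))  # high 6.6
for feature, contribution in result["reasons"]:
    print(f"{feature}: +{contribution:.2f} percentage points")
```

For this hypothetical patient, blood pressure, smoking, and diet push the prediction into the high category, while above-average daily activity pulls it slightly down and is therefore left out of the reasons list.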

In conclusion, explainability is a key requirement for healthcare models, because patients have unique needs and characteristics that cannot be overlooked. At Medeva, we prioritize explainability alongside model performance, applying model-specific and model-agnostic XAI techniques that give end-users clear explanations. This enables healthcare providers to make better-informed decisions and provide better care to their patients.